When optimising something, you generally you want as few things as possible competing for the scarce resources. This is pretty routine in the server world, which is why it surprises me that I haven’t seen anyone talking about this. So let’s fix that now.

Table of contents

- Table of contents

- Corrections and updates

- TL;DR

- My setup

- Steps

- Close apps

- SSH into the computer

- Check the current state

- Stop the GUI

- Check the state again

- Disable –lowvram

- Start the Automatic1111 webui

- Make the port accessible to other devices

- Results

- Why?

- What about Windows and MacOS

- If this isn’t viable for you

- Another technique

- Wrapping up

Corrections and updates

- 2023-08-27 01:24: In the video I mentioned getting 4-5x the performance. I was wrong. It’s 3x. Still very worthwhile, but worth correcting.

- 2023-08-28 13:49: Added note about, and link to a video that experiments with

--medvramfor SDLX, and my subsequent experimentation with that.

TL;DR

Stopping the GUI frees up enough VRAM to make a meaningful difference to the performance of StableDiffusion:

- Stop the GUI with something like

sudo systemctl stop display-manager. - Make sure that the Automatic1111 webui starts without the

--lowvramoption enabled. - Connect to it remotely.

My setup

- GTX 970 4GB.

- 32GB system RAM.

- AMD FX(tm)-8350 Eight-Core Processor @ 4 - 4.2Ghz.

Steps

Close apps

Close any apps that you have running and don’t want to loose data in. Basically what ever you’d do before shutting down/rebooting the machine.

SSH into the computer

SSH to the computer from another machine. This asserts that you comfortably have what you need to continue the next steps.

Check the current state

Run nvidia-smi to see what the GPU looks like at the moment. On my system, I see about 700MB used out of 4096MB (4GB).

Stop the GUI

This step may vary between different distributions, so you may need to google it. But on my OpenSUSE system, it’s sudo systemctl stop display-manager

Check the state again

Run nvidia-smi again, and you should now see that there is 0 VRAM usage.

Disable –lowvram

Edit your startup script that calls webui.sh (or webui.sh itself if you modified it).

Find --lowvram and remove it.

Start the Automatic1111 webui

Start up the webui as you would normally. If you normally add --lowvram here, don’t.

Make the port accessible to other devices

SSH method

You need to be able to access the port so that you can access the webui from another machine. I’ve mostly been using ssh -L like this:

ssh hostname -vL 7860:localhost:7860Where hostname is the machine that is running the service that you want to connect to. Note that you don’t need to change localhost.

You will then connect to http://localhost:7860 in the browser on the machine that you are connecting from (ie the computer you SSH’d from).

Reverse proxy method

If you’d like to access it on other devices, like your phone, you can do so by setting up a reverse proxy with a tool like nginx, or socat.

Here’s a quick command that you can use to run socat:

socat -d TCP4-LISTEN:7861,fork TCP4:localhost:7860Make sure to open port 7861 on your firewall.

You will then connect to http://hostname:7861 in the browser on the machine that you are connecting from (ie the computer you SSH’d from).

Make sure to change hostname to the name or IP address of the machine that is running the service.

Results

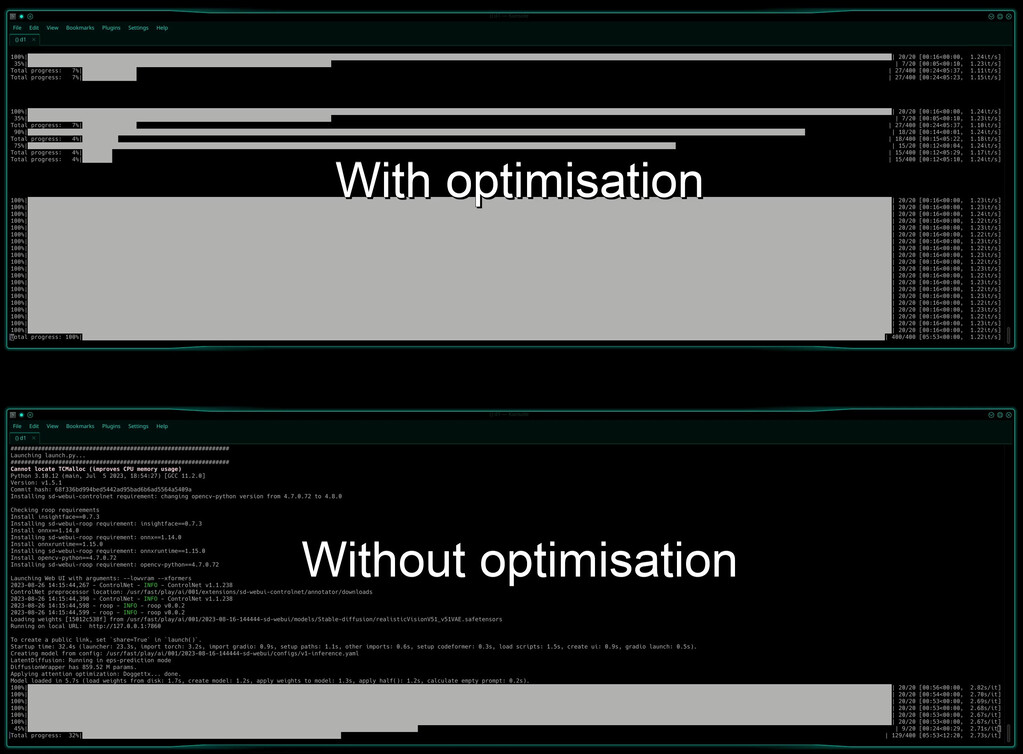

Above: Two screenshots of runs in action.

- Before: 00:52s-00:56s per 20 step image. -> 2.73s/it.

- After: 00:16s per 20 step image. 1.22s/it.

Update: Interestingly, a day after I published this article, I came across this video where someone went from no manual VRAM optimisations to using --medvram to get an 11x speed boost with SDXL. They have more VRAM than I do, and they are doing it specifically with SDXL, which I am not. Regardless, I was curious to see how --medvram would work for my setup. The answer is that it is close enough to same, sometimes a second slower than without --medvram. It works a lot of the time without OOMing. This surprised me, because --medvram used OOM almost instantly for me a few months ago. So the fact that it works at all now is excellent progress.

Why?

By freeing up this VRAM, there is enough space to no longer need --lowvram for most use-cases. --lowvram invokes optimisations that prioritise using less memory, at the expense of processing time.

What about Windows and MacOS

I don’t know.

On Windows you might be able to get close to the same results by running Automatic1111 as a Windows service and then logging out. There will probably be several challenges to solve in doing this, and I don’t think that it will get down to 0 usage, but I suspect that you will still free up enough VRAM to be worth pursuing.

For MacOS, everything I want to say is based on assumptions on how things used to work, that may not be true now. It would be worth researching further to see what is possible.

If this isn’t viable for you

Generally speaking, even if you can’t use this technique, you want to remove as much competition for the GPU VRAM as possible. Close absolutely every window you can. Even if it’s only a calculator, it’s still eating VRAM.

Another technique

Another way of achieving this is to have 2 GPUs in your system. Dedicate one of them to running the GUI and applications, and one to running stable diffusion.

Wrapping up

When I first started using Automatic1111, --lowvram was absolutely essential to get it to work at all. The stable diffusion community is hard at work, and things are improving rapidly. So it’s been really interesting to see just how functional it is now without --lowvram, and how it’s able to gracefully degrade in many cases. There are still enough cases where it doesn’t gracefully degrade that I wouldn’t want to run it without --lowvram if I haven’t freed up VRAM first. But the progress is impressive none-the-less.

This blog post is about Automatic1111, but the method is applicable to any other AI service.

Have fun.